“Alexa, where are the cats?”

As is well documented, I have a significant number of feline overlords who, thanks to multiple Sure Petcare cat flaps, can come and go as they please. The new Connected range of cat flaps comes with a web site and an iOS app (a thin skin over the same web site), and is built on what appears to be a pretty solid RESTful API. A few months ago I spent a bit of time monitoring the web app to reverse engineer bits of the API, and then built an Alexa skill so that I could ask my house where all the cats are.

The end result works like this:

If you only have one cat flap, the standard Sure Petcare API will let you know if your cat is in or out, since it only has one entrance/exit to monitor. My house has three cat flaps: one grants access to the garden room (entirely separate from the house), another to the garage, and the third leads from the garage into the house. So the standard API saying “inside” could mean house, garage or garden room, and saying “outside” could mean they’re in the garage or have actually gone outside.

To solve this, a bit of spelunking in the API.

The API

First steps: discover the API and see what information was available.

I wasn’t the first crazy cat geek to head down this road: a quick Google found that over on GitHub Alex Toft had put together a few PHP scripts to play with the API. That was enough to start with, but as I’m playing on Windows and am very comfortable throwing PowerShell around, I ported and extended them into a collection of PowerShell scripts. These let me tweak the parameters and make sure I was requesting the objects I needed.

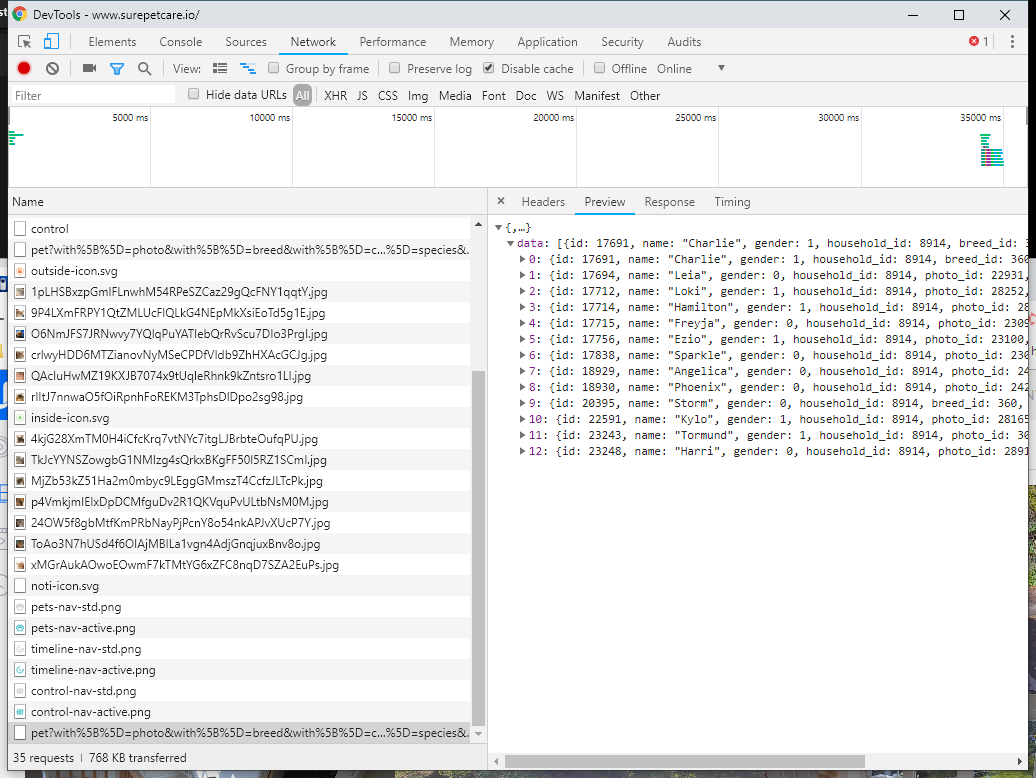

The other part of API discovery was actually using the web app that runs at surepetcare.io. It is a robust and sensibly structured API, and the web app makes good use of it. Simply using Chrome’s developer tools I was able to watch the web app GET, POST, PUT and DELETE objects and understand how to get all the data I wanted.

The Logic

And so to the questions I wanted to answer, the goal being to ask Alexa sensible questions about the cats’ whereabouts and get human-like answers. I settled on a few things I wanted to know:

- Where is <specific cat>?

- Who is in <specific location>?

- Who is in/out?

- When did <specific cat> go out/come in?

- Who has been in/out longest?

Also, because I keep forgetting, I wanted to ask:

- How old is <specific cat>?

And so they don’t get stuck:

- How are the batteries in the cat flaps?

I later added the ability to set a cat’s location for when they’ve sneaked in or out through a door or window:

- <specific cat> is in/out.
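Most of these questions come down to filtering and sorting a list of cats, once each cat has a resolved location and a timestamp for its last movement. Here is a minimal sketch of two of them. The data shape (`name`, `location`, `since`), the placeholder cat names other than Tormund, and the timestamps are all assumptions for illustration, not what the Sure Petcare API actually returns.

```javascript
// Hypothetical data: one entry per cat with a resolved location and the time
// of its last movement. Field names and values are made up for illustration.
const cats = [
  { name: 'Tormund', location: 'outside', since: new Date('2019-03-01T08:15:00Z') },
  { name: 'Cat B', location: 'house', since: new Date('2019-03-01T06:30:00Z') },
  { name: 'Cat C', location: 'outside', since: new Date('2019-03-01T07:00:00Z') },
];

// "Who is in <specific location>?"
function whoIsIn(cats, location) {
  return cats.filter(cat => cat.location === location).map(cat => cat.name);
}

// "Who has been in/out longest?": the cat at that location whose "since"
// timestamp is the earliest, or null if nobody is there.
function longestAt(cats, location) {
  const there = cats.filter(cat => cat.location === location);
  if (there.length === 0) return null;
  return there.reduce((a, b) => (a.since <= b.since ? a : b)).name;
}
```

With the sample data, `whoIsIn(cats, 'outside')` lists Tormund and Cat C, and `longestAt(cats, 'outside')` picks Cat C because it left first.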

Alexa

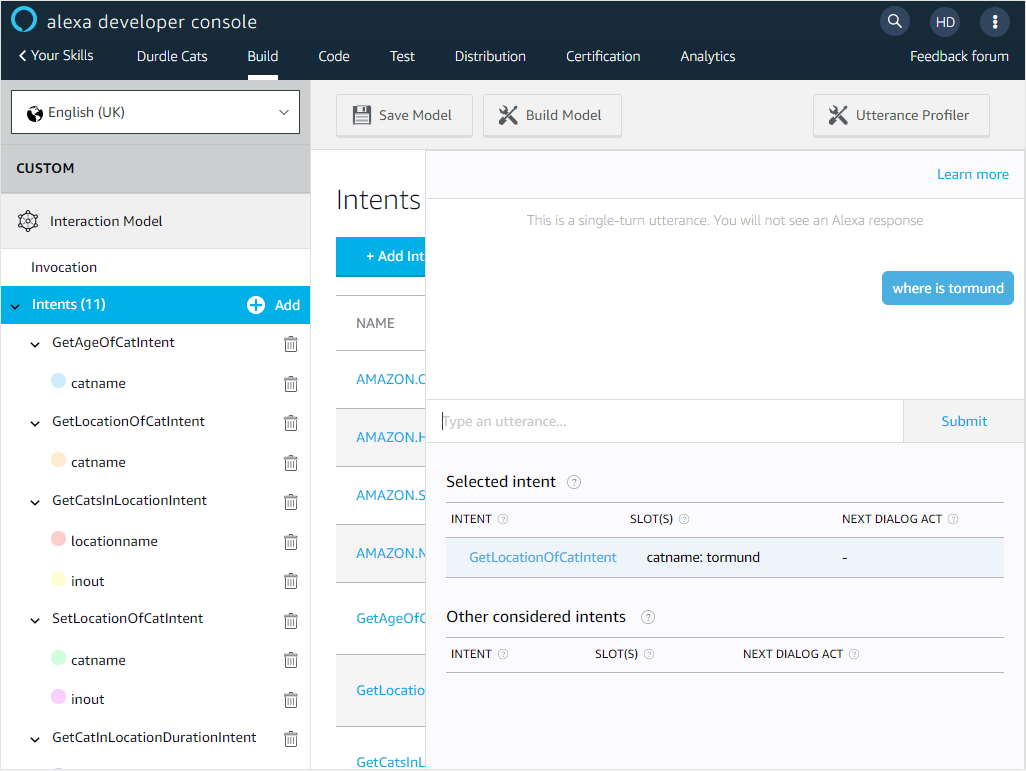

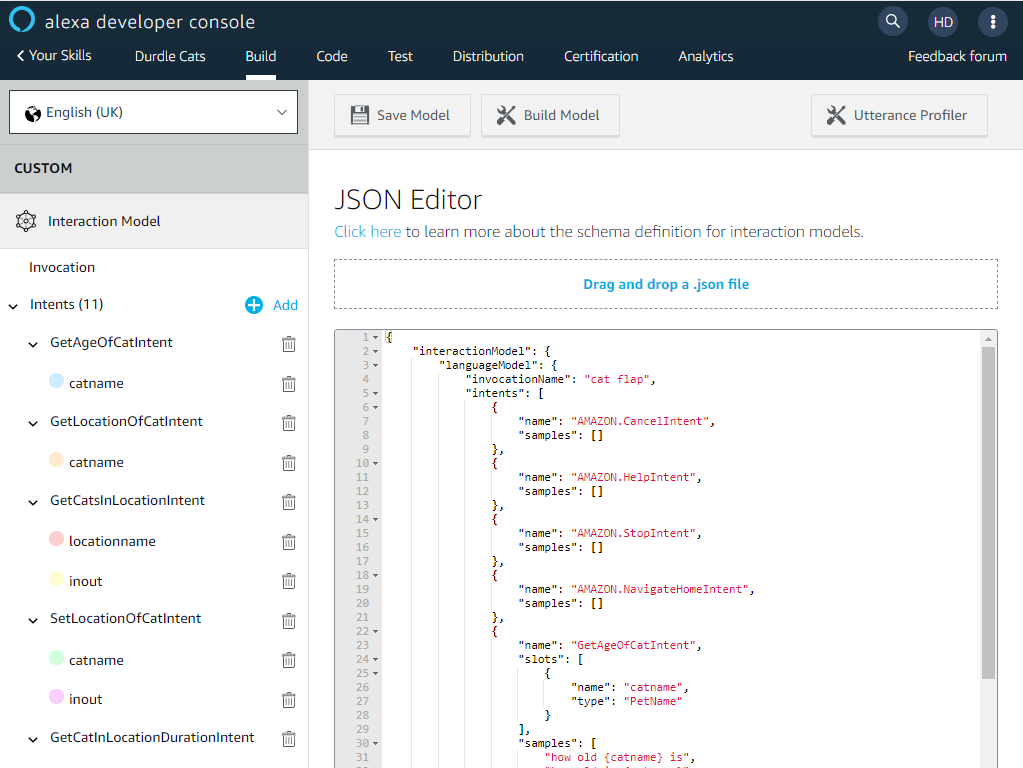

In Alexa skill parlance, all those questions we want to ask or things we want to do are “intents”. The placeholders for cats, places, locations are called “slots” and the example phrases we might say are “utterances”. Together these elements form the interaction model for a skill.
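To make those three terms concrete, here is roughly how one intent, its slot and its utterances sit together in the interaction model JSON that the developer console accepts. This is a trimmed sketch, not the model from my skill: the intent, slot and invocation names are taken from the example later in this post, and the single slot value is just a sample.

```javascript
// Trimmed sketch of an interaction model, written as a JavaScript object.
// Utterances are called "samples" in this JSON format.
const interactionModel = {
  interactionModel: {
    languageModel: {
      invocationName: 'cat flap',
      intents: [
        {
          name: 'GetAgeOfCatIntent',
          slots: [{ name: 'catname', type: 'PetName' }],
          samples: ['how old is {catname}', 'how old {catname} is'],
        },
      ],
      // Custom slot type listing the values the slot can take.
      types: [
        {
          name: 'PetName',
          values: [{ name: { value: 'Tormund' } }],
        },
      ],
    },
  },
};

// Serialised form, ready to paste into the console's JSON editor.
const json = JSON.stringify(interactionModel, null, 2);
```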

With an Amazon developer account you can create a Custom Alexa Skill and attach it to the Echo devices in your home. They also have a Test tab in the developer console where you can type your queries at it for testing.

I opted for node.js for the backend. It runs well in a minimal Docker container on a system at home, which gives me a scripted, reliable, repeatable build of the system. It also gives me an HTTP server running on any port I like, which I can expose to the Internet via an SSL reverse proxy.

A lot of the heavy lifting for talking to Alexa is done by alexa-app-server and alexa-app. The former acts as a host for one or more alexa apps, the latter is the framework we’ll use to put our interaction model to work.

Here’s a simple intent from the node.js code:

alexaApp.intent('GetAgeOfCatIntent', {
    "slots": {
      "catname": "PetName"
    },
    "utterances": [
      "how old is {catname}",
      "how old {catname} is"
    ]
  },
  async function (req, res) {
    const catName = getMatchedCat(req);
    const catDetail = catdobs.find(x => x.name === catName);
    const speech = getAgeSpeechForCat(catDetail);
    res.say(speech);
    res.send();
  }
);

You can see that the slots and utterances are defined up front, along with the intent name. By convention (it seems) you name these ThingYouWantToDoIntent, but as long as they match up to the intent defined in the interaction model on the Alexa developer console it doesn’t really matter.

This is called by saying “Alexa, ask cat flap how old is Tormund?” or “Alexa, ask cat flap how old Tormund is?”. Custom skills like this have to be prefixed with a two-word trigger phrase, which is itself prefixed with “ask” or “tell”. For example, I can say:

Alexa, tell cat flap Tormund is in.

This is about as simple as it gets: we extract the catname from the slot (via a helper function that unpacks the request object), do a lookup on some data, then build a fragment of speech to send back.
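The lookup and speech-building steps might look something like the sketch below. This is not the code from the repo: the shape of the `catdobs` entries (`name`, `dob`), the date of birth itself, and the `ageOf` helper are assumptions made for illustration, and slot extraction is left out.

```javascript
// Hypothetical lookup table with a made-up date of birth.
const catdobs = [{ name: 'Tormund', dob: '2016-05-20' }];

// Whole years and months between a date of birth and "now" (UTC).
function ageOf(dob, now = new Date()) {
  const born = new Date(dob);
  let months = (now.getUTCFullYear() - born.getUTCFullYear()) * 12
    + (now.getUTCMonth() - born.getUTCMonth());
  // Not yet reached the birth day in the current month: drop one month.
  if (now.getUTCDate() < born.getUTCDate()) months -= 1;
  return { years: Math.floor(months / 12), months: months % 12 };
}

// Build the sentence for Alexa to speak; handles an unmatched cat gracefully.
function getAgeSpeechForCat(catDetail, now = new Date()) {
  if (!catDetail) return "Sorry, I don't know that cat.";
  const { years, months } = ageOf(catDetail.dob, now);
  const plural = (n, word) => `${n} ${word}${n === 1 ? '' : 's'}`;
  return `${catDetail.name} is ${plural(years, 'year')} and ${plural(months, 'month')} old.`;
}
```

Passing `now` as a parameter keeps the function easy to test, since the answer otherwise changes from day to day.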

Once you understand this pattern it becomes quite easy to throw together simple Alexa skills. The alexa-app package also makes life easier by letting you set up all this stuff programmatically, and then it will generate a JSON file that represents the interaction model to be loaded into the developer console.

Visiting the endpoint of the skill in a browser performs a standard GET request and returns a test page as well as copies of the JSON needed.

Using the Code

Grab a copy of the code from GitHub, create a config.json based on the -dist copy in the repo, and put your SureFlap token in it.

You’ll also need to add your SureFlap household ID and the topology of your cat flaps. You can query all these with my PowerShell scripts, or use Chrome’s dev tools to monitor logging in to your own instance. I recommend doing this anyway to really familiarise yourself with how the web app consumes and manipulates the data. Fire it up with:

npm start

By default the app starts up and exposes a page on http://localhost:8080/alexa/catflap which will list the information you need to populate the Alexa Skill (intents, utterances, slots).

Cat Flap Topology

As I mentioned, I have multiple cat flaps with their own particular topology. If you have just one flap you won’t need to do anything, but with more than one you can define the flaps and where they lead (which rooms/zones they connect).

Edit config.json and add items to the flaps array for each pet flap you have. The format is:

{
  "id": device_id,
  "in": "location-inbound",
  "out": "location-outbound",
  "name": "name-of-catflap"
},

for example:

{
  "id": 123456,
  "in": "garden room",
  "out": "outside",
  "name": "garden room"
},

You need to leave the first "id": 0 item. This makes sure the system works when you’ve manually set a pet’s inside/outside state. The logic of where a cat is for multiple flaps works based on the device_id of the last cat flap a cat walked through, and the topology in the config lets us know where a cat ends up for each flap. When a cat walks through a flap, this device_id is attached to the cat object. If you set a cat’s location as “inside” or “outside” via the app (or this skill), that device_id is not present, so we have to have a generic in/out location.
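As a rough sketch of that lookup (not the code from the repo): given the flaps array and a cat’s last movement, find the flap by device ID and fall back to the id 0 entry when there is none. The position shape (`direction`, `deviceId`), the "in"/"out" values of the id 0 entry, and the garage and house device IDs are assumptions made for illustration.

```javascript
// Flaps in the config.json format above. Only 123456 comes from the example;
// the other IDs and the id 0 entry's values are made up.
const flaps = [
  { id: 0, in: 'inside', out: 'outside', name: 'manual' },
  { id: 123456, in: 'garden room', out: 'outside', name: 'garden room' },
  { id: 234567, in: 'garage', out: 'outside', name: 'garage' },
  { id: 345678, in: 'house', out: 'garage', name: 'house' },
];

// Hypothetical position: direction is 'in' or 'out'; deviceId is absent when
// the location was set manually via the app or the skill.
function resolveLocation(position, flaps) {
  const id = position.deviceId ?? 0;
  // Unknown device IDs also fall back to the generic id 0 entry.
  const flap = flaps.find(f => f.id === id) ?? flaps.find(f => f.id === 0);
  if (!flap) throw new Error('config is missing the id 0 fallback flap');
  return position.direction === 'in' ? flap.in : flap.out;
}
```

So a cat that last went “out” through the house flap resolves to the garage rather than outside, which is exactly the ambiguity the single in/out state cannot express.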

All this code is on GitHub, including the example interaction model with all my cat names and aliases in. If you have an Alexa device and at least one cat flap you have everything you need to make this work. I’d love to see what people can do with it. Talk to me on Twitter if you do something interesting!
